Brains, societies, and semantic spaces

When spacetime itself becomes smart


"..when we are asked to care for an object, when that object thrives under our care, we experience that object as intelligent, but, more importantly, we feel ourselves to be in a relationship with it."

--Sherry Turkle, Alone Together

As we drift into a society that offers us increasing personal independence, one that's both cheered along and equipped by technologies that eliminate our direct dependence on one another---as countries and districts pull away from cooperation over fears of losing their sense of a unique identity, replaced by narrow tribal affiliations to sports teams and special interest groups---so we dare to call our technological creations `smart'.

Meanwhile, in technology, we talk about software defined everything: a new generation of infrastructure that is more plastic and adaptable, like the Amazon cloud where machines come and go transparently, and technology bends to our will---we say it `just works', because it complies with unquestioning immediacy, even as it rules our expectations. We tend to think that it is the infusion of programmability into all the things that makes them smart solutions, because obedient responsiveness mimics intelligent characteristics, and we want to have our hands in the machine training it and guiding it like a pet, giving it our approval for its compliance, even to the detriment of scalability.

To business, smart has come to mean `application centric', where a just-in-time menu of tailored user opportunities places customers into the role of judge. It seems that our broadly curated view of smart is, in fact, one of having our way.

The brains that realize this universal obedience are hidden from view, but do we truly believe that tools and infrastructures are becoming smart, or are they merely offering a wider range of obedience options for selection by smart users?

For many engineers and practitioners, these are dumb questions: the goal is just to make something work as quickly and cheaply as possible; but, for town planners, building designers and software architects, these are questions we need to be asking, questions that delve into the very nature and viability of our future society. In this note, I want to compare what we mean by `smart' with the technologies and processes that matter to us, now and in the future. I will suggest that we cannot understand what smart is without understanding scale (which is different from scalability). We might need to rethink what we believe smart is. If smart is simple and generic, can we engineer it into everything? What would that mean? Could we live in a truly smart world?

Brains and societies - centralization or decentralization?

In technology, we often mimic our brain-centric view of the world to cope with our challenges (see The Brain Horizon). We invent technologies as an extension of our own agency, gluing together parts like a robotic exoskeleton with superpowers. After all, our brains are smart, right? Brains are the source of our intelligence. Brains are centralized, and, like a controller model, they handle feedback loops.

This self-centric view of intelligence makes some important points. Brain (central) models of organisms have some advantages:

- Mixing perspectives. By collating information from an entire set of sensor-actuators it can mix and correlate the experiences, to favour innovation, or creative thinking across the entire system. A brain can thus adapt the behaviour of the entire organism, in a coordinated way. But this is only helpful, if it is fast enough: centralization also means contention over shared resources (in this case the brain's processing capabilities). We trade poorer dynamics for enhanced semantics.

- Speed and specialization. The brain has to work much faster than the system it is controlling, else it will not happen `just in time'. As organisms get bigger, they tend to get slower for this reason (also because the transport of energy around the system from a centralized heart and lungs is bottlenecked). This means any brain is limited by its network to the outside, and it has to expend a lot more energy than the space it controls, because all the activity is being focused into a small region.

Decentralized systems, by contrast, can be self-sufficient at every point, without any central dependency. They are robust to failure, and their feedback loops are short, so they can react very quickly without the expense of a superior brain, by reflex. They don't have the `God's eye view' of everything, and so they cannot innovate about the behaviour of the composite system. They are stuck in basically the same pattern, adapting only to what each component can see in situ. We think of them as dumb.

Now it gets confusing. Some `dumb' systems exhibit smart behaviour (where `dumb' means the opposite of `smart' here): the immune system is a good example that I have used many times over the years. It exhibits what we understand as deductive and inductive reasoning, selecting friend from foe, and adapts to challenges fast enough, but it is almost completely decentralized. Moreover, looked at through the microscope, the brain is just a homogeneous lump of cells, so what makes it smarter than, say, a kidney or a heart?

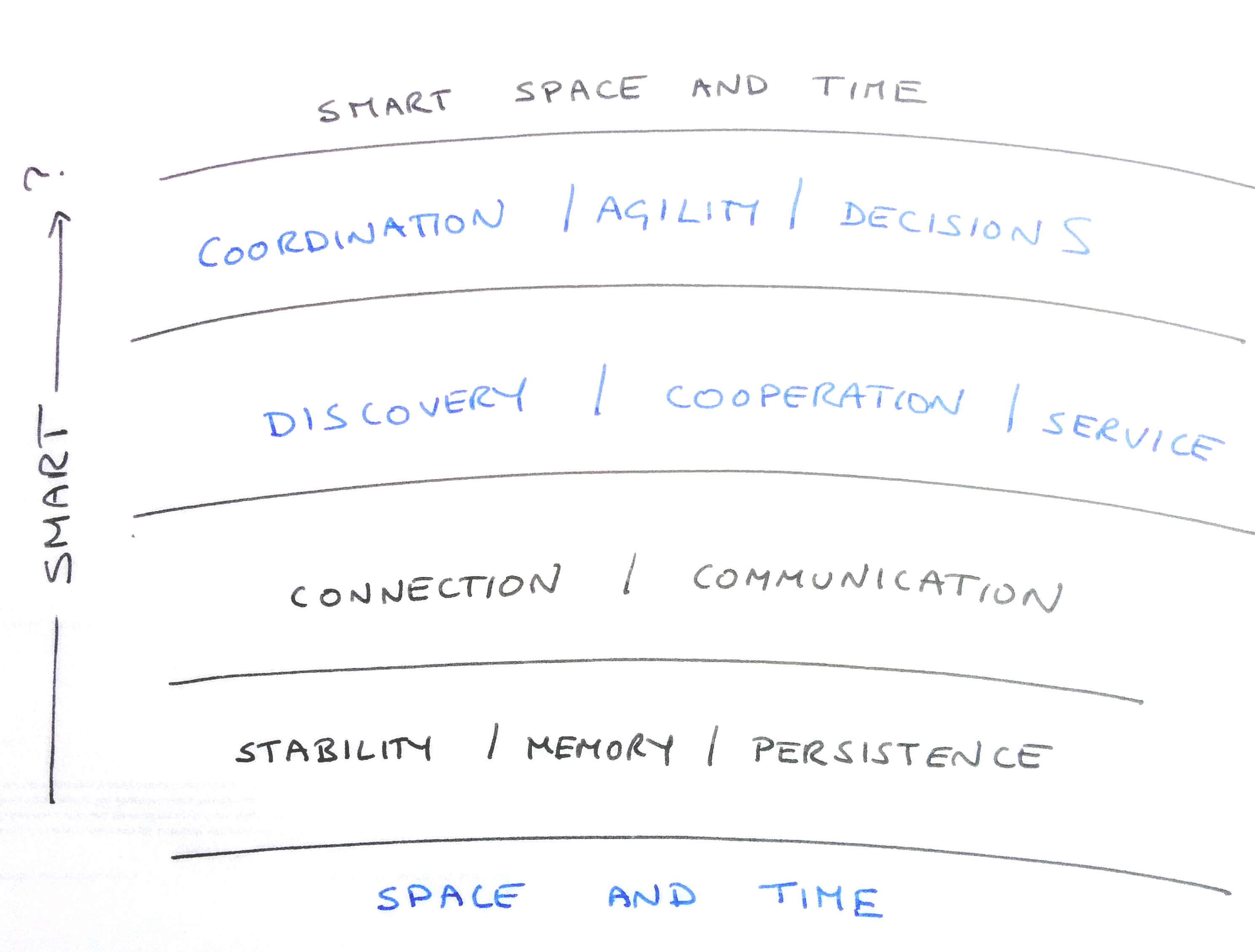

`Smart' is clearly a matter of perspective. It is about the scale at which we observe the world. The same is true of `centralized'. There can be many locally centralized focal points in a system, not only a single enormous point, in some remote datacentre. A central brain could be your smartphone, in your pocket, or embedded as a proxy, like a substation.

In IT and software engineering, we tend to do what service providers tell us to do. If market forces are selling space in a datacentre, obviously everything has to be built around datacentres. If the latest thing is cloud computing by API, then that's what we do. We get locked into such paradigms precisely because we simply go with the flow. What we need to ask ourselves is whether we are using centralization for its functional characteristics, or merely as an imitation of our own sense of agency.

Smart is fit for purpose

So what do we mean by smart, and why would we want it? Setting aside the question of obedience for a moment, our handed-down presumption is `that which is smart requires a brain' (preferably dominated by ours), i.e. some central intelligence. This is what we associate with the organisms of the natural world. As a result, we try to imitate human intelligence using algorithms and processes, localized inside machinery that is mechanical or computational, but determined by an identifiable `control centre'. Ironically, once we've finished bottling these qualities, these automata, acting as proxies for our own intelligence, seem very dumb to us. That's because they don't adapt to context or have much awareness of state. Algorithms (what we mean by computation) usually freeze intent and context, so they only seem smart if they are mixed and matched on demand, with a human operating the levers. Intelligence is clearly about more than computation.

Next, we look for artificial intelligence by trawling `big data', and hope to discover interesting archaeological finds, something we missed with our limited human faculties. This is what a brain does, on a smaller optimized timescale. But, when we find it, this doesn't look smart either, because the wisdom distilled from the results takes too long to apply to a timescale that is different from the here-and-now. At best the insights can be used for long term planning, or future innovation.

This tells us that there are two aspects to what we think of as smart:

- We perceive an action as helpful in a given context (fit for our present purpose).

- The action arrives `just in time' to be useful in that same context (anyone can come up with something too late).

So, smart is something to do with exhibiting a certain plasticity to challenges, and innovating quickly around them, with approximate goals. In promise theory language, we could define smart like this:

Smart: an assessment made about a person, device, or agency that provides a time-saving or value generating service to cope quickly and cheaply with a situation unfamiliar to the assessor.

Notice the economic language to emphasize the role of relative cost. Also notice the role of changing circumstances. What this suggests is that nothing (or no one) is unequivocally smart, only smart in a given context. It also seems that certain technologies could improve those abilities. This seems about right, so let's stick with that.

Smart is just in time

We call someone `quick thinking' if we think they are smart, so let's look at timescales:

If we overlay some technologies on top of this, by the timescales at which they serve, we can see how they look smart and dumb from the perspectives of these different timescales.

We used to think that IT Wizards were very smart, because they knew so much and could fix any problem over a timescale of days and weeks. Now manual work seems hopelessly foolhardy. CFEngine looked smart for managing systems on a timescale of minutes, days and weeks, but it looks slow and stupid at the speed of an operating system or cloud controller. Kubernetes applies almost exactly the same bottled feedback process or `reasoning' to cloud controllers, but applied with greater speed over a wider area. It looks smart relative to that timescale.

If we translate the classic two-state model of state management (MTBF/MTTR) into an adaptive challenge/response (where `failure' just means promise not kept, or expectations not met) then we see where systems live on this chart. These technologies seem smart because they apply, by brute force and at much shorter timescales, the kind of reasoning that humans carry out over longer timescales.
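As a rough illustration of the two-state picture, the long-run fraction of time a promise is kept follows from the standard relation MTBF / (MTBF + MTTR). The sketch below is a toy under stated assumptions, not a model taken from this note: the function name and all the numbers are hypothetical, chosen only to show how shortening the response time, at a fixed rate of challenges, changes how dependable a system appears.

```python
def fraction_promise_kept(mtbf: float, mttr: float) -> float:
    """Long-run fraction of time spent in the 'promise kept' state.

    mtbf: mean time between failures (how long until the next challenge)
    mttr: mean time to repair (how long the response takes)
    Both values must use the same time unit.
    """
    if mtbf <= 0 or mttr < 0:
        raise ValueError("mtbf must be positive and mttr non-negative")
    return mtbf / (mtbf + mttr)


# Hypothetical numbers: a challenge arrives about once every 24 hours.
challenge_interval_hours = 24.0
manual_repair = fraction_promise_kept(challenge_interval_hours, 8.0)     # a working day
automated_repair = fraction_promise_kept(challenge_interval_hours, 0.1)  # a few minutes

print(f"manual: {manual_repair:.3f}, automated: {automated_repair:.3f}")
```

The same bottled reasoning scores very differently depending only on the timescale at which it is applied, which is the sense in which a technology can look smart or dumb relative to a given timescale.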

Larger size makes everything relatively dumber

Now, look at what scaling up does to a system:

As a system grows, without substantial changes to infrastructure, the time to process longer and longer feedback loops grows (latency and workload). So it needs to work faster and faster to maintain the same expectations. As expectations seek faster behaviour, each previous solution seems relatively dumber. So, unless we can scale up everything in proportion, by decreasing response time, (known as dynamical similarity), our perception of the system will change. In a centralized system, the challenge is the latency (and risk) associated with transporting information, plus the ability to pack sufficient processing into a central brain.
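To make the scaling argument concrete, here is a minimal sketch, assuming a deliberately crude model: a single central controller, a signal round trip across the radius of the system, and a worst-case wait while the controller serves every agent in turn. The function names, the service time, and the system sizes are all hypothetical; only the rough speed of light in optical fibre is a physical figure.

```python
def central_loop_time(n_agents: int, radius: float,
                      signal_speed: float, service_time: float) -> float:
    """Feedback loop time for one agent served by a single central controller.

    Assumes a round trip over `radius` plus a worst-case wait while the
    controller works through all `n_agents` one after another.
    """
    transport = 2.0 * radius / signal_speed
    queueing = n_agents * service_time
    return transport + queueing


def speedup_for_similarity(small_loop: float, large_loop: float) -> float:
    """Factor by which the larger system must speed up to match the smaller one."""
    return large_loop / small_loop


SIGNAL_SPEED = 200_000.0  # km/s, roughly the speed of light in optical fibre
SERVICE_TIME = 0.001      # seconds of controller work per agent (hypothetical)

baseline = central_loop_time(10, 1.0, SIGNAL_SPEED, SERVICE_TIME)
for n_agents, radius_km in [(10, 1.0), (1_000, 100.0), (100_000, 10_000.0)]:
    loop = central_loop_time(n_agents, radius_km, SIGNAL_SPEED, SERVICE_TIME)
    factor = speedup_for_similarity(baseline, loop)
    print(f"{n_agents:>7} agents: loop {loop:.4f} s, speed-up needed x{factor:.1f}")
```

In this toy, the speed-up factor is what the central brain and its network would have to deliver just to keep expectations unchanged as the system grows. An agent reacting by local reflex would pay only the single service time, whatever the size of the whole.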

We make a system faster (at fixed technology) by making it dumber and more decentralized!

This dependence on scale should not be a surprise. At the cellular level, a brain is a decentralized, homogeneous mass. If we examine a few cells of a brain, we do not think it is smart. If we examine a thousand brains in a room, we don't experience the same level of smart behaviour as when interacting with a single brain. This tells us that we need to be looking at the right scale to perceive smart behaviour.

Images borrowed online.

In the picture above, the left hand side is run by a reasoning brain, with a mechanical assistant: its scalability is limited and requires massive centralized effort. The right hand side is completely automated, with no centralized reasoning; the solution is fast and efficient, but not adaptive (its reasoning is built into its form). The left hand side is fighting to impose its own scale; the right hand side is trying to be scale invariant. In the right hand side, the space itself has become the representation of functional behaviour. To scale up, the smart thing to do is to give up the very things we believe smart represents: reasoning and questioning of every detail, and replace it by dumb predictability.

Brains are societies of cells, and societies can have brain-like behaviour, but according to very different observers. Intelligence is a matter of scale and perspective. Trying to force intelligence from one scale into a larger or smaller scale by `mimicking with machinery'

Are we building smart systems or dumb systems? The right question to ask is: at what scale?

Dumb emergent systems can make apparently smart decisions (like the immune system), as long as there is a match of timescales. It does not depend necessarily on centralization, because the information still gets mixed by infrastructure. (We call this gossiping in technology.)
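A minimal sketch of that mixing, assuming the simplest textbook form of push gossip: in each round, every agent that has heard a rumour tells one other agent picked at random, and nobody coordinates the process. The function name and the population sizes are hypothetical, and real gossip protocols add details (failure handling, anti-entropy, membership) that this toy leaves out.

```python
import random


def gossip_rounds(n_agents: int, seed: int = 0) -> int:
    """Count push-gossip rounds until every agent has heard the rumour.

    Agent 0 starts informed. In each round, every informed agent tells one
    agent chosen uniformly at random. There is no central coordinator.
    """
    if n_agents < 1:
        raise ValueError("need at least one agent")
    rng = random.Random(seed)  # seeded so that runs are repeatable
    informed = {0}
    rounds = 0
    while len(informed) < n_agents:
        # Each informed agent picks one target; duplicates simply merge.
        targets = {rng.randrange(n_agents) for _ in informed}
        informed |= targets
        rounds += 1
    return rounds


for size in (10, 1_000, 100_000):
    print(f"{size:>7} agents informed after {gossip_rounds(size)} rounds")
```

Since the informed set can at most double in each round, the number of rounds can never drop below the base-2 logarithm of the population. In practice it stays close to that order, so purely local, dumb exchanges still mix information across the whole system on a short timescale.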

We are left with a question: is smart a good thing, or a bad thing? To scale society's operation, we don't want too much of it. To innovate, we want a lot of it in the right places. It needs to be fit for purpose.

What can we learn from societies and cities?

Recently I became interested in the study of cities, based on some research by the Santa Fe Institute (see Smarter Spaces), and how they scale in terms of their achievements relative to their size. Cities are spaces that have evolved from defensive postures into functionally centralized cauldrons for mixing ideas and services. We sometimes talk about melting pots, where cultures meet. Studies by researchers have revealed how infrastructure plays a key role in enabling these processes in a very generic way.

- Innovation (messy mixing) is a key output of community networks that integrate diversity. Communities flocked together, with herd safety in mind, and only later collaboration as smarter hunter-gatherer groups. Cities started with defense, but a pleasant side effect of cooping people up was mixing of ideas and rapid innovation (compare western wartime development with the decentralized agrarian society in Asia).

- Isolation (tidy separation) is important for specialization, concentration and gestation of ideas. So one needs to be able to switch the network on and off intentionally to appear smart.

Our beliefs about separation of concerns may need revisiting to understand smart behaviour.

- Efficiencies of scale come about from shared infrastructure. If the infrastructure does not keep pace with the needs of its clients, the network breaks up into smaller pieces. Centralization is a source of contention, so innovation often results in swings from centralization (contention but optimal innovation) to decentralization (no contention but poor innovation).

Centralization brings a humanized view, and we identify a human view as a smart view, because people relate to individuals, centralized points of service, singular identities, and agents as the intentional sense of control. This is a side effect of our lives as organisms ruled by brains. But we are also painfully aware of how trying to scale individuality (by queueing) has limitations.

To scale up, efficiently, we can use decentralization for the dehumanized processes (automation), where we no longer need or want to have an explicit relationship. The collective system can still be `emergently smart' (at a larger/slower scale), but often we hardwire a particular train of reasoning into a machine, deliberately making it `dumb' to avoid the inefficiency of rethinking.

When we accept `standard lore', best practice, or `do it because everyone else does it', we have turned ourselves into machines of our own making. This is efficient, but is it smart?

Smart beyond reason

What we call reasoning plays a major role in what we think of as smart. But the myth of `reasoning' is held in too high a regard by modern philosophy. From a promise theory perspective, it is just a process of search and selection over larger reservoirs of ideas. The ability to locate ideas and recombine them into new ideas is facilitated by infrastructure (it acts as a catalyst, a meeting point for different parts in a puzzle). Moreover, supporting infrastructure can be attached to agents themselves: the human brain is capable of storing experiences in memory, so we don't have to thrash everything out over a whiteboard in realtime from the beginning of language for every conversation we have. Memory allows us to solve problems alone in the library, or in the shower, long after the experiences during which we accumulated the idea fragments. Memory or `learning' allows us to embed smart processes anywhere and at any scale, without massive centralization.

Management books (written by extroverts) like to tell us that we need to work in teams to be smart. This is false. Humans are not dumb agents that can only improve their capabilities by direct interaction. We can pick up ideas and experience over many years, cache them in memory, and piece them all together quietly in the privacy of our own thoughts at any moment.

By studying the promise theory view of smart processes, it is possible to identify the universal characteristics of reasoning and scale them. I call this concept semantic spacetime, and have developed the idea both to understand existing intelligence, and to develop technology. Broad functional components are represented as scaled `superagents', and their effective promises. They have to be compatible to be connectable. If they are not, then a logical story will not work. It's not that hard to understand.

With technology, we often try to force reasoning into the timescale of our choosing, with brute-force controllers; but, by doing so, we pre-select a particular solution pathway, and shut down all the others. This closed mind approach can lead to blind alleys, so adaptive innovation needs constant and expensive rethinking.

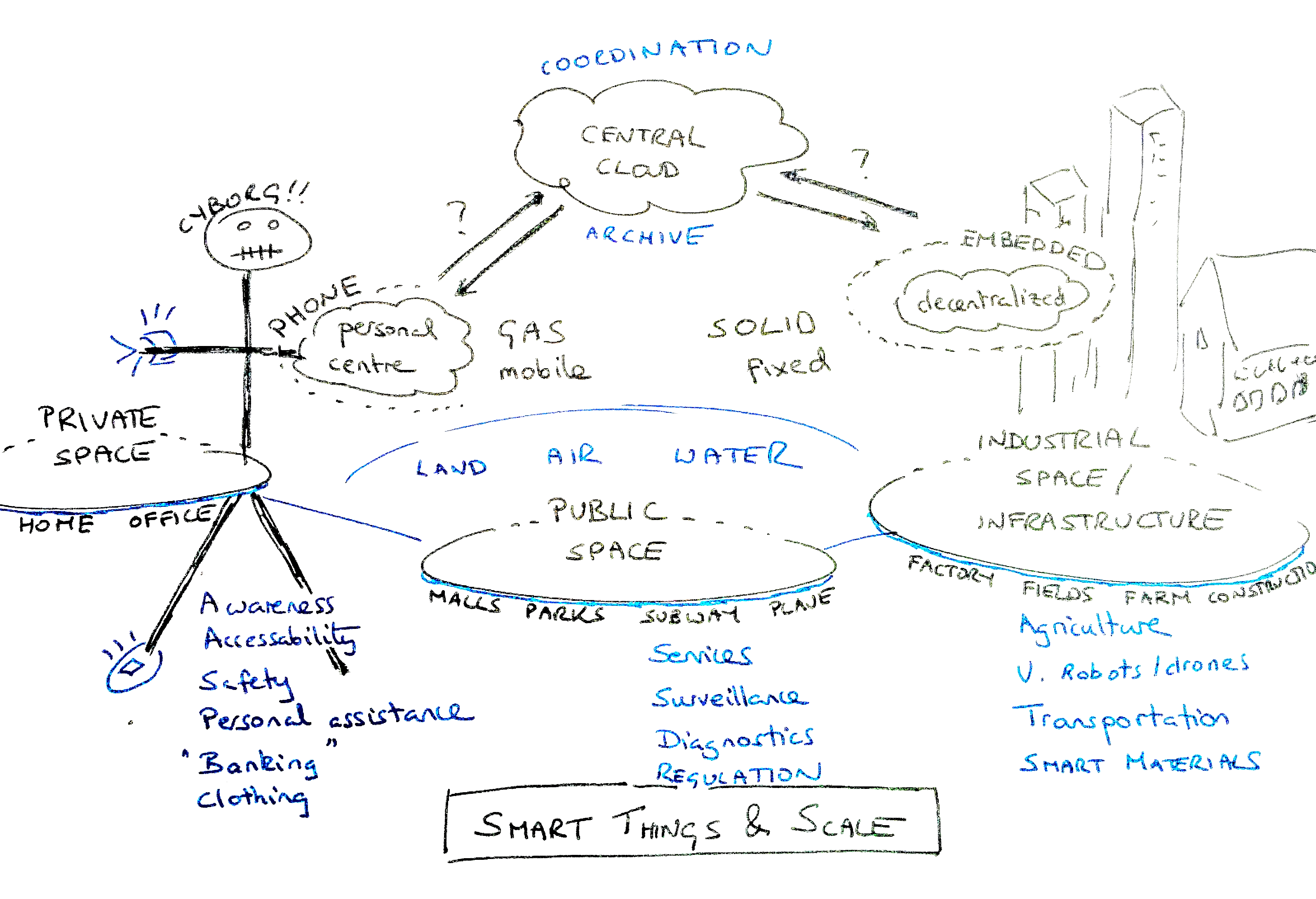

An Internet Of Things?

Right now we are going through a transformation, implanting prosthetic IT across all of society, in the hope of finding an application. This is a business opportunity, not the result of careful planning, so it is entering the world more like an epidemic than an act of design. This is just part of the messy process of innovation. We are combining genes, and waiting for `selection'. It says nothing about how successful it will be. We have smart phones to extend our bodies, smart cars to extend our transport, smart buildings to extend our working or living environments, and smart cities to extend our societies. One day we might have smart governance too!

Our present assumption is that connecting a world together into a network will somehow make it smarter. That much is surely true, assuming we can identify the right scales. We know that there is a relationship between networks and innovation. Services and data analytics are the two obvious areas where IoT can contribute to smart spaces. What use is that? What do we think smart is?

- Enable adaptive innovation at the scale of society.

- Enable surveillance at any scale.

- Divine post hoc stories about the world at any scale.

- Identify patterns/similarities, for potential reuse (discovery) at any scale.

The possibilities seem limitless, if we are willing to bear the cost.

The user experience: software and automation are the opposite of smart

What we are really trying to interconnect, through technology, is the larger human-computer network of our day to day lives. In one sense, this is the same journey we began with information technology in the 1980s, by putting computers into everyone's homes, and with the development of an intuitive user experience (UX) design. The economics of IT networks has since brought cloud centralization for economy of scale (a classic early stage optimization), even as the more mature telephone network has done the opposite. From a technology perspective, we look to infrastructure and services to help us where we are, with increasing intolerance of communication latency.

Centralized cloud datacentres are where we are today, but we need to be careful not to trap ourselves at one particular scale (like the tractor, not seeing the wood for the trees). Centralized cloud services and analytics can still help to make a giant analytic `brain', with a slow adaptive feedback loop, but will it be smart in context, and just in time? Locally decentralized service is better for many of the dumb cases. There are many shades in between, if we bother to understand them.

Marketing uses the term `smart' to mean `Intel inside'---and the popular `software is eating the world' argument suggests (to the delight of software developers) that software is what makes the world smarter. Yet, implanting information technology into familiar contexts, and then connecting it all up, is not enough. If we just put information networks into everything, it will not become smart. Making it programmable doesn't make a device smart either, because it still needs a human to intervene and perform the adaptation. Software is as smart as training your dog to fetch the newspaper, building a vending machine, or learning a piece of music by heart. Even humans, when working in collaboration, deliberately forego intelligent reasoning to give ourselves to the task. Automation is the opposite of smart, because smart has no value once innovation is set aside, and distribution of the result is the important task. That is not a value judgement: reasoning in the wrong context is just a bottleneck.

Our software designs are still primitive, and too low-level, to describe (smart) infrastructure at the scale of society. We are missing an abstraction that makes contextualized functional behaviour scalable. We need to get away from thinking in APIs where everyone is wiring up their own hobby board applications in what we call `cloud', to make a more generic platform that supports human ideas of cooperation. I call this abstraction `workspaces'.

Smart spaces - generalizing all the smartness once and for all

Why not skip over specific technologies altogether, and use the power of abstraction to help us unify our view? Can we commoditize space and time directly? In terms of infrastructure, this means better sharing and reusability. Functional spaces can be made smart by allowing the resource usage to adapt and self-optimize in situ: automated warehouses that move popular items close to the access points, automated resource managers that move processes or data around for efficient access (a resource scheduler e.g. like a next generation Kubernetes), etc. Why does a cluster node have to be in a datacentre rack? Why could it not be a controller in someone's house? There is also the notion of multi-tenancy to handle separation and mixing (cloud controllers). To make it efficient, we need to separate the space from the occupant, else it will cost too much to re-use (container tools like Docker). This is not always the right answer. A rented office space in a high rise block is not well suited as a concert hall or opera house. So, some spaces (that persist over long timescales) make sense as permanent installations (see the semantic spacetime project).

To evolve smart systems for a context of smart everything, we need to break down the artificial technology barriers and cached reasoning we've built up in present paradigms, and look beyond to what is universal in context.

Are containment and multi-tenancy, themselves, enablers of smart behaviour? The formation of cells, in biology, is a scaling strategy for limiting scale (like broadcast zones), which avoids undue centralization, and enables the dumb form of emergent intelligence. They may increase adaptive efficiency, by enabling re-use (time sharing or space sharing), which in turn leads to an economy of scale (exploiting what we call `statelessness' of the infrastructure, and disposability). This is reflected in the way we share resources in both space (storage, reasoning) and time (networking, reasoning). We call them time division multiplexing, and channel multiplexing in data communications. To be a smart space, it needs tenants to fill it with promises that can be mixed and matched. Is smart IT infrastructure about software? Software is the language that expresses behaviours, but what really makes something smart is whether it adapts to context in a timely fashion.

Buildings, homes, cities...

The concept of smart homes has been around for thirty years now, but we are still only just learning what we want from it.

What could a smart city mean? Is it a technological, software-driven city? Giving it API buttons to push doesn't make it smart; giving it fixed algorithms doesn't make it smart. But those things could be involved in networking human-machine expertise at appropriate scales. Everything about infrastructure applies to the city and vice versa. But a city is not just about machine efficiency, or information readiness; it is about the soul of the people within it too. Our expectations of human life go beyond pure economics. A smart city enables humans to be happy and improve their lives.

Cities and colonies formed from herds, and encampments as defensive postures, but quickly proved to be cauldrons for innovation. If you put people in close quarters, with shared infrastructure, there are economies of scale, better facilities, and a lot of sharing of ideas.

If we focus on small technological devices, instead of on human trajectories, we will miss the opportunity to make technology serve humanity rather than the other way around. There is a tendency to idolize the performance of the economy as the motivation for all things. The economy exists to make us happy. In these times where we are questioning the very nature of our future lives, presently defined through work, we shall need to confront the balance between efficiency and fulfillment (experiences, states of being, travel, escape).

Things that make us feel good are often the opposite of efficient. They are pedagogical, even indulgent (like expensive coffee).

What does this tell us about the future of smart infrastructure? It can be

- An enabler, extending our natural limitations

- A crutch for helping us to exceed our limitations.

But human desires are limited by our brains, and their cognitive limitations. IT cannot help us to manage relationships, unless we give up (outsource) those relationships by delegation. Whether we give it up to another person, or to a machine makes little difference to us, but it might mean a lot to our neighbours.

Is a smart city about a big controller?

Machine, Machine Messiah

The mindless search for a higher

Controller, take me to the fire

And hold me, show me the strength

Of your singular eye...

--Yes, Machine Messiah

The IT world continues to turn to centralized controllers as a first option, because developers generally start with small prototypes and then ask someone else to scale them. But we must understand how dynamics and semantics can scale together. If we concentrate a dense network into a small space, with high speed, then a process of reasoning can act as a controller, because it pays to transport signals and data from actuators and sensors to this brain. But that strategy doesn't scale in a number of ways:

- Latency to act quickly accumulates over the distance from actor to controller.

- The energy to sustain the processing and transport increases in cost, and communication breaches preferred containers.

Here is a lesson from the natural world: autonomy favours speed, centralization favours coordination, while aggregation favours innovation and problem solving. So we end up with the idea that the source of `smart' intelligence is really network patterns and relative speed.

Final words: to the software engineers and the town planners...

What is a smart society, a smart system? Smart is about successfully exploiting scale and context to adapt to (or exceed) our expectations. It might be centralized or decentralized. Smart is not just fast or efficient, but something that removes barriers in our human desire for exploration and reasoning. It benefits from rich information, but that is not a sufficient condition. Let's start building a real understanding of those concepts into how we design and manage functional spaces at all scales.

Human limitations drive us to think centrally, in communities and identities. If the attributes that make something smart are about networks: mixing, isolation, adaptation to context, and scale, then we can humanize that by embedding aspects of smart behaviour at every scale, from a human community level to international logistics and relations. This suggests the generalized concept of semantic spacetime.

In information technology, we have some interesting developments around software containerization and so-called process orchestration. I believe we need a more mature platform abstraction (what I am calling `workspaces') to act as an umbrella for these cases, to get beyond the REST API and microservice scale.

If society replaces certain human interactions with embedded IT services, at the scale of an individual human life, we can speed up the pace of life for individuals, and even free some service providers from a life of drudgery, but we will not make society more fit for purpose---mainly because we are on a threshold of uncertainty about what that purpose really is. Seeking complete autonomy without the check or comment of peers may simply make us insufferably spoiled. However, if we can bring new services to the places they are most needed, clearly we can quench the desire for both speed and improved relevance in one go, giving people the illusion of greater autonomy (relying on scalable proxy machinery instead of direct human cooperation). Relying on dumb infrastructure can make a smart process smarter at a higher scale.

We can speed up the world, but there are no short cuts to the localized knowledge that makes something fit for purpose. If a commodity expert system told you a commodity answer, why would you trust it for your specific context? Following a GPS is harmless and generic, tested across a population, again and again. Diagnosing a unique local problem is a different matter entirely.

Due diligence, in our interactions with the world, remains the difference between smart trusted knowledge and dumb information, and trust is a purely human issue: it cannot be accelerated by artificial means. Ultimately, smart exists for human well being. Let's think some more about that.

Sun Mar 27 12:25:33 CEST 2016

See also the work on semantic spaces, and workspaces.
